How do we make great language models for smaller languages such as Icelandic?

March 12, 2024I believe that to develop a good language model, the tokenizer must be great.

Currently, the architecture of language models such as GPT and BERT depends on tokenization. Before the training of language models all text must be given a numerical representation, or a respective token. The act of converting text into tokens is called tokenization, and the algorithm that performs tokenization is called a tokenizer.

If the training data is poorly tokenized it becomes difficult for the language model to converge. Therefore, to develop a good language model, the tokenizer must be great.

The question is: how good are the BPE tokenizers of state-of-the-art generative language models for Icelandic and what can we do to improve them?

To evaluate this, I measured the performance of three different tokenizers. Here are the results:

Qualitative Analysis

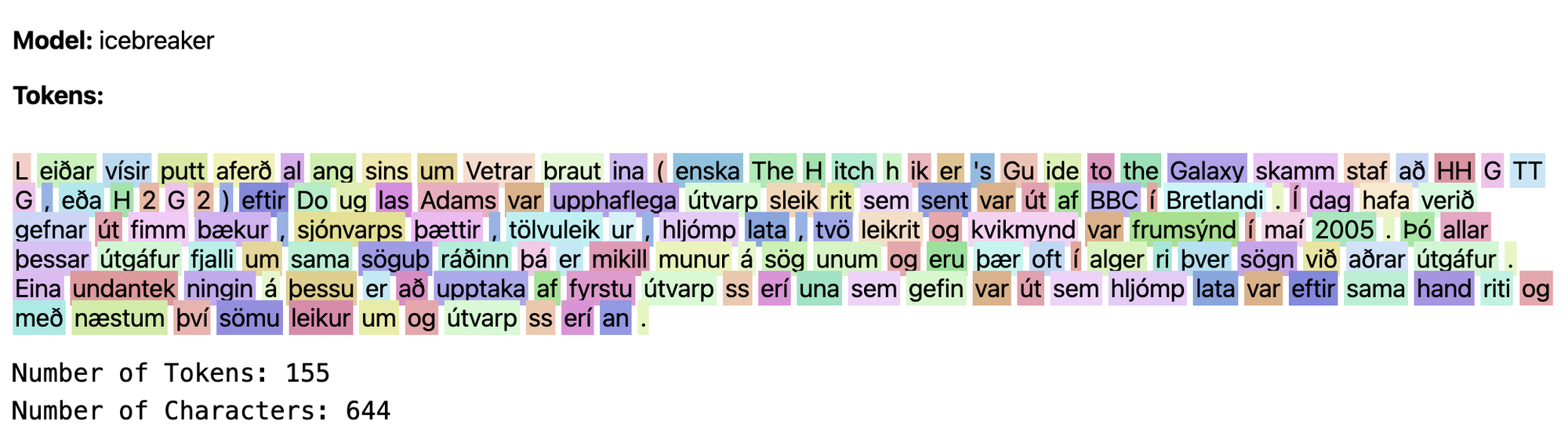

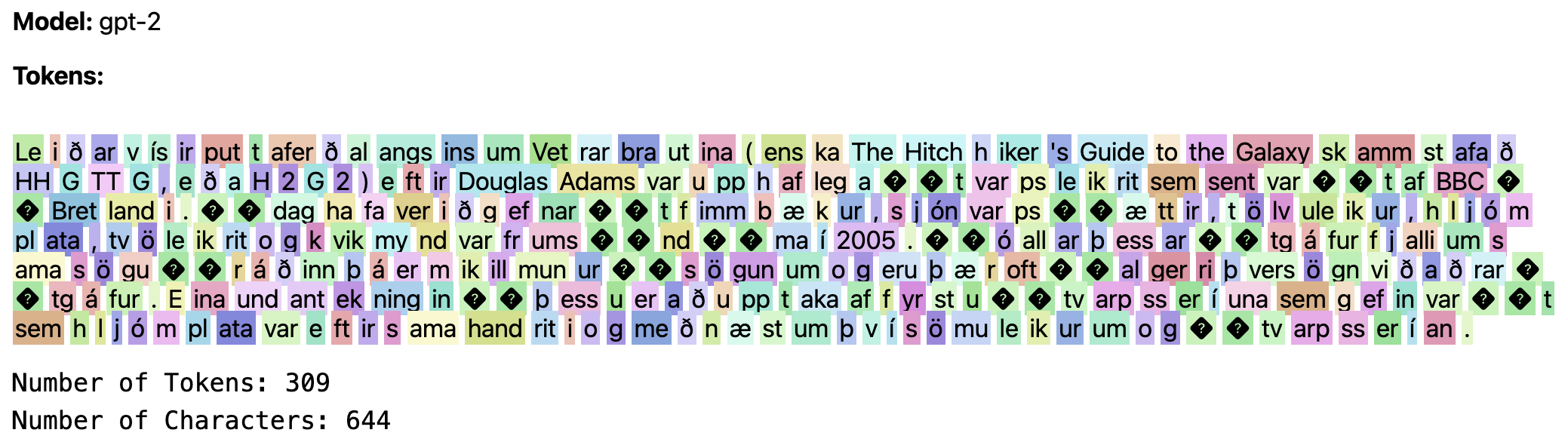

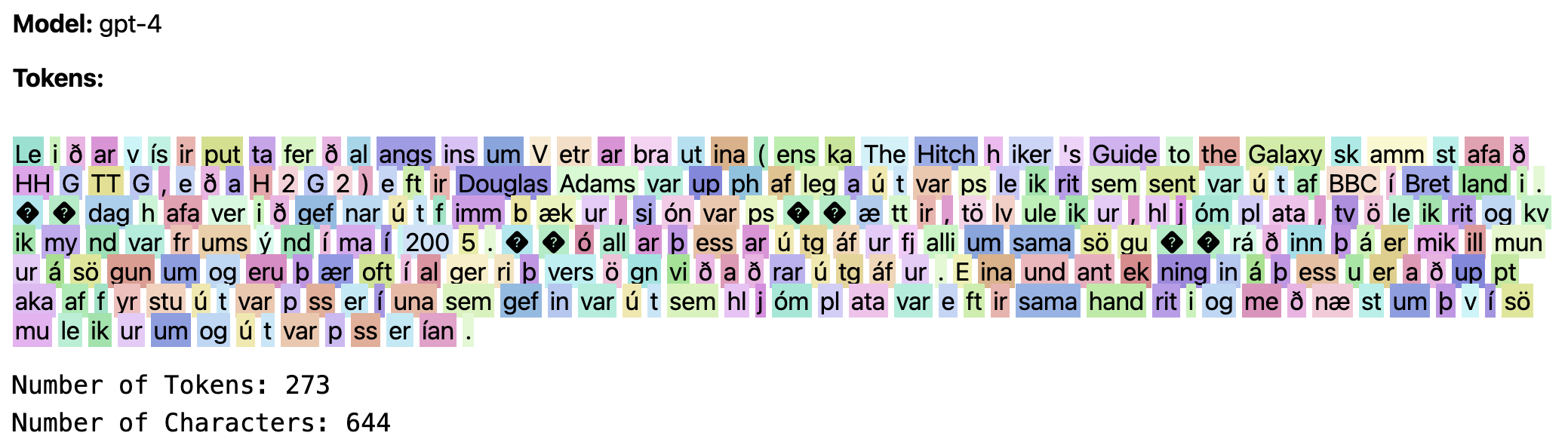

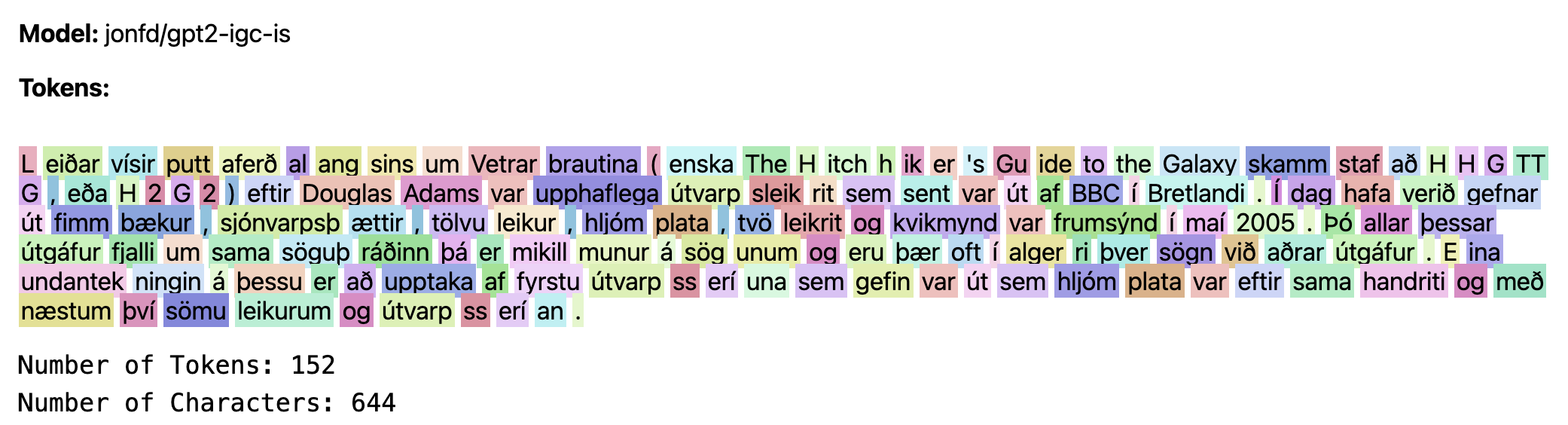

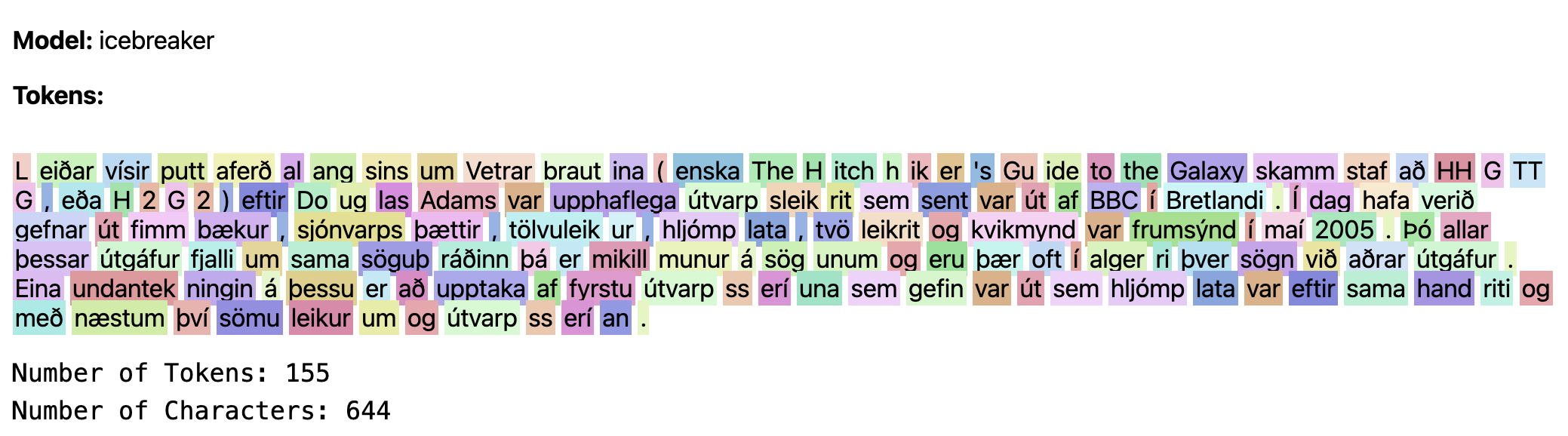

I tokenized the first paragraph (644 characters) from the Icelandic Wikipedia page on "The Hitchhiker's Guide to the Galaxy." Each token is represented by a unique color. Ideally, each token should encapsulate meaningful information, which means selecting an appropriate token length and sensibly dividing longer words into multiple tokens.

The GPT-2 tokenizer struggled with the text, using 309 tokens to represent the 644 characters. The token lengths seemed arbitrary, and it handled longer words poorly, which is expected since it was primarily trained on English.

The GPT-4 model, being a state-of-the-art multilingual language model, showed better performance in Icelandic, using 273 tokens for the same text. It managed to represent words like "sama" and "oft" as single tokens, which is advantageous. However, it still required multiple tokens for some shorter words.The tokenizer developed by Jón Friðrik Daðason, named gpt-2-igc-is, was specifically trained on Icelandic text and demonstrated superior performance, requiring only 152 tokens. This tokenizer efficiently handled both small and large words, a testament to its training on Icelandic text.

I could only find one tokenizer which was explicitly trained on Icelandic text, it was made by Jón Friðrik Daðason and is called gpt-2-igc-is. His tokenizer represents the 644 characters with 152 tokens. Significantly less than the GPT-4 tokenizer! The gpt-2-igc-is tokenizer, tokenizes small words as single tokens, and can handle large words. This is expected since it was solely trained on Icelandic texts.

Quantitative Analysis

I used "Laxdæla Saga," a text with a rich vocabulary of 6,064 unique words, to compare the tokenizers further. The gpt2-igc-is tokenizer had the lowest tokens per word (1.34) and tokens per character (0.25). The GPT-4 tokenizer followed, with 2.46 tokens per word and 0.45 per character. The GPT-2 tokenizer was the least efficient, with 2.82 tokens per word and 0.52 per character.

Discussion

Now, it has been established that the performance of tokenizers vary greatly. The GPT-2 tokenizer which was trained mostly with English texts performed badly on Icelandic texts. GPT-4 which is trained on a multilingual dataset, performed better. But, the gpt2-igc-is tokenizer which was solely trained on an Icelandic dataset performed the best. This is to be expected. The question then becomes: how can we improve upon the gpt2-igc-is tokenizer?

Introducing Icebreaker

One important detail we have not discussed yet is the vocabulary size of the tokenizers. Vocabulary size is as we might expect, how many unique tokens the tokenizer has available. Tokenizers with small vocabulary sizes have fewer unique tokens, and thus need many tokens to represent short texts, while those with a large vocabulary size need fewer tokens to represent text. The vocabulary size of the tokenizer also effects the language model. Generally it takes longer time or more iterations for language models to converge if the vocabulary size of the tokenizer is large. Hence, tokenizers with smaller vocabulary sizes are favored, if we have limited computing power. As a rule of thumb: a monolingual language model rarely requires a tokenizer with a greater vocabulary size than 50 000. The GPT-2 tokenizer and gpt2-igc-is tokenizer have a vocabulary size of 50 257 and 51 000 respectively.

I developed a new tokenizer named Icebreaker. It is trained on the same data as gpt2-igc-is tokenizer but the vocabulary size is 6% smaller. It still performs approximantly as well as gpt2-igc-is tokenizer despite the slight reduction in vocabulary size.

After performing the same qualitative test again the results showed that it needed three more tokens to represent the text in the article than the gpt2-igc-is tokenizer. And remarkably the Icebreaker tokenizer learned to represent compound words, and the article of different words. For example "Vetrar-braut-ina", "sjónvarps-þættir" "tölvuleik-ur" and many more, unlike the gpt2-igc-is tokenizer. The slight reduction in the vocabulary size greatly improved the tokenization!

Quantitative tests confirmed Icebreaker's efficacy, requiring 1.40 tokens per word and 0.26 per character, closely mirroring gpt2-igc-is's performance.

Conclusion

In conclusion, this article demonstrated the significant impact of tokenizer quality on the development of effective language models, particularly when dealing with languages other than English, such as Icelandic. The comparison among three different tokenizers; GPT-2, GPT-4, and the Icelandic-specific gpt2-igc-is highlighted that tokenizers designed with a focus on a specific language significantly outperform more general ones. The gpt2-igc-is tokenizer, which was exclusively trained on Icelandic text, showed superior performance in both qualitative and quantitative tests compared to its counterparts.

I introduced a new tokenizer, Icebreaker, to highlight the importance of optimizing vocabulary size. Despite having a 6% smaller vocabulary than the gpt2-igc-is tokenizer, Icebreaker demonstrated comparable performance, showcasing efficient tokenization of compound words and a more refined handling of the Icelandic language. This suggests that a well-designed tokenizer with a tailored vocabulary can achieve excellent performance without necessitating a vast number of unique tokens.